Deploying Multi-Node Kubernetes Cluster on AWS Using Ansible Automation

Today we will create an Ansible Role to launch 3 AWS EC2 instances.

We’ll also create Roles that install Docker on those instances and configure them as a K8s Master & K8s Worker Nodes using kubeadm.

Prerequisites:

- Basic knowledge of AWS EC2 instances, Ansible Roles & Kubernetes multi-node clusters.

- A virtual machine with Ansible installed (this walkthrough uses version 2.10.4).

- An AWS account.

Let’s start running the commands & writing the code…

Creating three Ansible Roles :

- Create one workspace, let’s say “aws-ansible”. Go inside this workspace & create one folder called “roles”. Now go inside this folder & run the three commands below.

ansible-galaxy init ec2

ansible-galaxy init k8s_master

ansible-galaxy init k8s_slave

- This creates three Ansible Roles inside the “aws-ansible/roles/” folder.

Setting up Ansible Configuration File:

In Ansible we have two kinds of configuration file: global & local. We will create one local configuration file (ansible.cfg) inside the “aws-ansible” folder & run every future Ansible command from this folder, because Ansible only picks up a local configuration file from the directory it is run in.

Creating AWS Key-pair & putting it in the Workspace :

Go to AWS => EC2 => Key Pairs & create one key pair, let’s say “ansible.pem”. Then download the key into your VM workspace. Finally, run…

chmod 400 ansible.pem

Creating Ansible Vault to store the AWS Credentials :

Lastly, in your workspace run…

ansible-vault create cred.yml

- It will ask for a vault password & then open the vi editor on Linux. Create two variables in this file & put your AWS access key & secret key as values. For example…

access_key: ABCDEFGHIJK

secret_key: abcdefghijk12345

- Save the file. Now you are finally ready to write Ansible Roles.

Writing Code for ec2 Role :

Task YML file :

Go inside the folder “aws-ansible/roles/ec2/tasks/” & start editing the “main.yml” file.

Explanation of code (main.yml):

- First, we use the “pip” module to install two packages, boto & boto3, because the Ansible AWS modules rely on these Python libraries to talk to the AWS API. The package list is held in a variable called “python_pkgs”, whose value is stored in the “aws-ansible/roles/ec2/vars/main.yml” file. I will share that file after explaining this code.

- Next, I used the “ec2_group” module to create a Security Group on AWS. We could create a strict Security Group for our instances, but to keep things simple I allowed ingress & egress on all ports. Never do this in a real deployment.

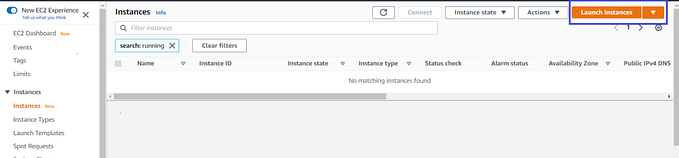

- Next, I used the “ec2” module to launch the instances on AWS. Most of its parameters are self-explanatory, so I only want to talk about two task keywords. The first is “register”, which stores all the metadata returned by the module in a variable called “ec2” so that we can parse the required information from it later. The second is “loop”, which iterates over a variable containing a list; the “item” keyword refers to the current list value. This runs the ec2 module 3 times with different instance tags, which launches 3 instances.

- Next, I used the “add_host” module, which adds hosts to Ansible’s in-memory inventory while the playbook is running. Under this module, I used the “hostname” keyword to give the address to store in the dynamic host group. Here I parsed the “ec2” variable to find the public IP of the 1st instance.

If you notice, here I created two host groups, ec2_master & ec2_slave. The 1st instance belongs to “ec2_master” & the 2nd & 3rd instances belong to the “ec2_slave” host group.

- Finally, I ran the “wait_for” module to hold the playbook until the SSH service has started on all the nodes.
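The steps above could be sketched roughly as follows. This is an illustration rather than the exact file from the repository: it assumes the legacy “ec2” and “ec2_group” modules that ship with Ansible 2.10, and every variable name except “python_pkgs”, “access_key” and “secret_key” (sg_name, region_name, key_pair, instance_flavour, ami_id, subnet_name, instance_tag) is a hypothetical name chosen for this example.

```yaml
# roles/ec2/tasks/main.yml (illustrative sketch; variable names are assumptions)

- name: Install the Python libraries the AWS modules depend on
  pip:
    name: "{{ python_pkgs }}"
    state: present

- name: Create a security group that allows all traffic (demo only)
  ec2_group:
    name: "{{ sg_name }}"
    description: Wide-open security group for the K8s demo
    region: "{{ region_name }}"
    aws_access_key: "{{ access_key }}"
    aws_secret_key: "{{ secret_key }}"
    rules:
      - proto: all
        cidr_ip: 0.0.0.0/0
    rules_egress:
      - proto: all
        cidr_ip: 0.0.0.0/0

- name: Launch one instance per entry in the instance_tag list
  ec2:
    key_name: "{{ key_pair }}"
    instance_type: "{{ instance_flavour }}"
    image: "{{ ami_id }}"
    group: "{{ sg_name }}"
    vpc_subnet_id: "{{ subnet_name }}"
    assign_public_ip: yes
    region: "{{ region_name }}"
    count: 1
    wait: yes
    state: present
    aws_access_key: "{{ access_key }}"
    aws_secret_key: "{{ secret_key }}"
    instance_tags:
      Name: "{{ item }}"
  register: ec2          # with a loop, per-item output lands in ec2.results
  loop: "{{ instance_tag }}"

- name: Put the 1st instance in the ec2_master group
  add_host:
    hostname: "{{ ec2.results[0].instances[0].public_ip }}"
    groupname: ec2_master

- name: Put the 2nd and 3rd instances in the ec2_slave group
  add_host:
    hostname: "{{ ec2.results[item].instances[0].public_ip }}"
    groupname: ec2_slave
  loop: [1, 2]

- name: Wait until SSH is reachable on every node
  wait_for:
    host: "{{ ec2.results[item].instances[0].public_dns_name }}"
    port: 22
    state: started
  loop: [0, 1, 2]
```

Because “register” is combined with “loop”, the result of each iteration is stored under “ec2.results”, which is why the public IP is read from “ec2.results[N].instances[0]”.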

Vars YML file :

Open the “aws-ansible/roles/ec2/vars/main.yml” file & store all the variables that we mentioned in the “tasks/main.yml” file along with their respective values.
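A vars file for this role might look something like the sketch below. Only “python_pkgs” is named in this article; all other variable names and every value here are placeholders invented for illustration, so replace them with the names your tasks use and the IDs from your own AWS account.

```yaml
# roles/ec2/vars/main.yml (illustrative sketch; names and values are placeholders)

python_pkgs:
  - boto
  - boto3

sg_name: k8s_demo_sg                   # hypothetical security group name
region_name: us-east-1                 # use your own region
key_pair: ansible                      # key pair name, without the .pem suffix
instance_flavour: t2.micro             # pick a size that suits your cluster
ami_id: ami-xxxxxxxxxxxxxxxxx          # an Amazon Linux 2 AMI ID for your region
subnet_name: subnet-xxxxxxxx           # a subnet ID from your VPC

# One instance is launched per entry; the first one becomes the master.
instance_tag:
  - k8s_master
  - k8s_slave1
  - k8s_slave2
```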

Writing Code for k8s_master Role :

Task YML file :

As before, open the “aws-ansible/roles/k8s_master/tasks/main.yml” file.

Explanation of code:

- Here we need to install the kubeadm program on our master node to set up the K8s cluster. For that, I’m adding the yum repository provided by the K8s community. As I’m using Amazon Linux 2 for all the instances, we don’t need to configure a separate repository for Docker.

- Next, using the “package” module we install the “docker”, “kubeadm” & “iproute-tc” packages on our Master Instance.

- Next, I used the “service” module to start the docker & kubelet services. Here again, I used a loop over the list called “service_names” to run the same module twice.

- Next, I used the “command” module to run a kubeadm command which pulls all the Docker images required to run the Kubernetes cluster. Ansible has no dedicated module for “kubeadm”, which is why I’m using the “command” module.

- Next, we need to change Docker’s default cgroup driver to “systemd”, otherwise kubeadm won’t be able to set up the K8s cluster. To do that, we first use the “copy” module to create the file “/etc/docker/daemon.json” with the required content. Then we use the “service” module again to restart Docker so that the new cgroup driver takes effect.

- Next, using the “command” module we initialize the cluster & then using the “shell” module we set up the “kubectl” command on our Master Node.

- Next, using the “command” module I deployed Flannel on the Kubernetes cluster so that it creates the overlay network.

- A second “command” task generates the token that the slave nodes need to join the cluster. Using “register” I stored the output of this task in a variable called “token”. This token variable now contains the command that we need to run on each slave node so that it joins the master node.

- Lastly, I used the “shell” module to clear the buffer cache on my master node, because the setup leaves a lot of temporary data cached in RAM.
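Put together, the master role’s tasks could look roughly like this. It is a sketch under a few assumptions, not the author’s exact file: the repository URL and GPG key are left as hypothetical variables (k8s_repo_baseurl, k8s_repo_gpgkey) because they depend on the Kubernetes version you install, the pod network CIDR is Flannel’s default, and the Flannel manifest URL is the one currently published by the flannel-io project.

```yaml
# roles/k8s_master/tasks/main.yml (illustrative sketch)

- name: Add the Kubernetes yum repository
  yum_repository:
    name: kubernetes
    description: Kubernetes packages
    baseurl: "{{ k8s_repo_baseurl }}"   # hypothetical variable, version dependent
    gpgkey: "{{ k8s_repo_gpgkey }}"     # hypothetical variable
    gpgcheck: yes
    enabled: yes

- name: Install Docker, kubeadm and iproute-tc
  package:
    name:
      - docker
      - kubeadm
      - iproute-tc
    state: present

- name: Start and enable the docker and kubelet services
  service:
    name: "{{ item }}"
    state: started
    enabled: yes
  loop: "{{ service_names }}"

- name: Pull the images kubeadm needs
  command: kubeadm config images pull

- name: Switch Docker to the systemd cgroup driver
  copy:
    dest: /etc/docker/daemon.json
    content: |
      {
        "exec-opts": ["native.cgroupdriver=systemd"]
      }

- name: Restart Docker so the new cgroup driver takes effect
  service:
    name: docker
    state: restarted

# Small instance types may also need --ignore-preflight-errors (not shown).
- name: Initialize the cluster (10.244.0.0/16 is Flannel's default pod network)
  command: kubeadm init --pod-network-cidr=10.244.0.0/16

- name: Set up kubectl for the connecting user
  shell: |
    mkdir -p $HOME/.kube
    cp /etc/kubernetes/admin.conf $HOME/.kube/config
    chown $(id -u):$(id -g) $HOME/.kube/config

- name: Deploy Flannel for the overlay network
  command: kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

- name: Generate the join command for the slave nodes
  command: kubeadm token create --print-join-command
  register: token

- name: Drop the page cache built up during setup
  shell: echo 3 > /proc/sys/vm/drop_caches
```

Note that “kubeadm init” is not idempotent, so running this role a second time against an already initialized master will fail at that task.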

Vars YML file :

Open the “aws-ansible/roles/k8s_master/vars/main.yml” file & store the variable “service_names” with its values as a list…
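Assuming the list is called “service_names”, as in the task explanation, and holds the two services started there, the file would be as small as this sketch:

```yaml
# roles/k8s_master/vars/main.yml (illustrative sketch)

service_names:
  - docker
  - kubelet
```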

Writing Code for k8s_slave Role :

Task YML file :

As before, open the “aws-ansible/roles/k8s_slave/tasks/main.yml” file.

Explanation of code:

- Up to the Docker service restart task, this file is exactly the same as the task file of the “k8s_master” role. On the slave node, we don’t need to initialize the cluster or set up kubectl. Everything else is still required, because the slave node also needs the “kubeadm” command & Docker as the container engine.

- Next, I used the “copy” module to create a configuration file called “/etc/sysctl.d/k8s.conf”, which enables certain kernel networking rules on the slave.

- Next, to apply the rules we need to reload “sysctl”, & for that I used the “command” module.

Now here comes the most interesting part…

- If you remember, we used the “token” variable in our previous role to store the join command for the slaves. Registered variables are stored per host, so “token” lives in the namespace of the master node, not of the slave nodes this role runs on. So we need to reach into the variables of the host in the group that we created dynamically.

- For that, we use the “hostvars” keyword together with “groups['ec2_master']”, which is the list of hosts in the “ec2_master” host group. A host group can have multiple hosts (nodes), so to pick the 1st host we use the “[0]” index. That means “hostvars[groups['ec2_master'][0]]” refers to the variables of the master node.

- Next, using “['token']['stdout']” we read the join command that we can run on the slave to join the master node.
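The slave-specific tasks described above could be sketched as below (the tasks shared with the master role are omitted). The two sysctl keys are an assumption: they are the bridge settings commonly required for Kubernetes networking, and the article does not list the file’s contents.

```yaml
# roles/k8s_slave/tasks/main.yml, slave-only part (illustrative sketch)

- name: Let iptables see bridged traffic
  copy:
    dest: /etc/sysctl.d/k8s.conf
    content: |
      net.bridge.bridge-nf-call-ip6tables = 1
      net.bridge.bridge-nf-call-iptables = 1

- name: Reload all sysctl configuration files
  command: sysctl --system

# "token" was registered on the master, so it is read from the
# master's host variables rather than from the current slave host.
- name: Join the cluster using the command generated on the master
  command: "{{ hostvars[groups['ec2_master'][0]]['token']['stdout'] }}"
```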

Note : Here again we need to create the same “vars/main.yml” file that we created in the “k8s_master” role.

Finally, Create the Setup file :

- Now it’s finally time to create the “setup.yml” file, which we will run to create this entire infrastructure on AWS. Remember that we need to create this file inside the “aws-ansible” folder.

- Here we run the “ec2” role first, on our localhost, because it contacts the AWS API from our localhost. Also, using “vars_files” I included the “cred.yml” file in this play so that the “ec2” role can access it.

- In the next two plays, we run the “k8s_master” & “k8s_slave” roles on the “ec2_master” & “ec2_slave” dynamic host groups respectively.
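A minimal sketch of such a playbook, with three plays in the order just described, is shown below. It assumes that the SSH user, private key and privilege escalation settings for the EC2 hosts come from the local configuration file, so they are not repeated here.

```yaml
# aws-ansible/setup.yml (illustrative sketch)

- hosts: localhost
  gather_facts: no
  vars_files:
    - cred.yml          # vault-encrypted AWS credentials
  roles:
    - role: ec2

- hosts: ec2_master     # group filled in by add_host in the ec2 role
  roles:
    - role: k8s_master

- hosts: ec2_slave      # group filled in by add_host in the ec2 role
  roles:
    - role: k8s_slave
```

The order of the plays matters: the dynamic host groups only exist after the first play has run, and the slaves can only join once the master has registered its join command.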

GitHub Repository for Reference :

https://github.com/srasthychaudhary/Kubernetes-Multi-Node-AWS-Ansible

That’s all for the coding part. Now it’s time to run the playbook. For that, run the command below in the “aws-ansible” folder.

ansible-playbook setup.yml --ask-vault-pass

- Next, it will prompt you for the password of your Ansible Vault (the cred.yml file). Provide the password & then you will see the power of automation…

Final Words :

- There are endless possibilities for learning more about Ansible, Kubernetes & AWS. This is just a simple demo of Ansible Roles, but if you want you can build a bigger infrastructure by adding more roles & modules. Almost any kind of configuration can be achieved using Ansible.

Thank you for reading.