Integrating All Concepts Using Python

Main Script

First, the main script lets you choose which technology you want to work with:

- Red Hat Linux OS

- Docker containers

- Hadoop

- AWS
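A minimal sketch of how such a main menu might dispatch to each technology, assuming a simple number-to-handler mapping. The handler names (linux_menu, docker_menu, hadoop_menu, aws_menu) and the numbering are hypothetical placeholders, not the script's actual code; in a real script each handler would open its own sub-menu.

```python
# Hypothetical handlers; each stands in for a per-technology sub-menu.
def linux_menu():
    return "linux"

def docker_menu():
    return "docker"

def hadoop_menu():
    return "hadoop"

def aws_menu():
    return "aws"

# Map each menu number to a (label, handler) pair.
MAIN_MENU = {
    "1": ("Red Hat Linux OS", linux_menu),
    "2": ("Docker containers", docker_menu),
    "3": ("Hadoop", hadoop_menu),
    "4": ("AWS", aws_menu),
}

def render_menu(menu):
    """Return the menu text, one numbered option per line."""
    return "\n".join(f"{key}. {label}" for key, (label, _) in menu.items())

def dispatch(choice, menu=MAIN_MENU):
    """Call the handler for a menu number; return None for an unknown choice."""
    entry = menu.get(choice.strip())
    if entry is None:
        return None
    _, handler = entry
    return handler()

def main(read=input, write=print):
    """Show the menu once, read a choice, and run the matching handler."""
    write(render_menu(MAIN_MENU))
    result = dispatch(read("Choose a technology: "))
    if result is None:
        write("Invalid choice")
    return result
```

Calling main() runs the prompt interactively; passing read and write as parameters keeps the menu logic testable without a terminal.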

Red Hat Linux OS

Red Hat® Enterprise Linux® is the world’s leading enterprise Linux platform. It’s an open-source operating system (OS). It’s the foundation from which you can scale existing apps and roll out emerging technologies across bare-metal, virtual, container, and all types of cloud environments.

Linux can serve as the basis for nearly any type of IT initiative, including containers, cloud-native applications, and security.

With the Linux script you can view the date and calendar, create a directory, see your memory and hard-disk details, set up a web server, check network connectivity, switch to the root user, install software, start a service or daemon, and reboot or shut down your machine, all by simply pressing the corresponding menu number (1, 2, 3, and so on).
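Each of these Linux options boils down to an ordinary shell command. The sketch below maps menu numbers to argument lists and runs them with subprocess; it assumes a RHEL-style host (yum, systemctl) and root privileges where needed, and the numbering is hypothetical. It defaults to a dry run that only returns the command, and it leaves out switching to the root user, which is an interactive su - session.

```python
import shlex
import subprocess

# Hypothetical numbering; {placeholders} are filled from keyword arguments.
LINUX_COMMANDS = {
    "1": ["date"],
    "2": ["cal"],
    "3": ["mkdir", "-p", "{path}"],
    "4": ["free", "-m"],                    # memory details in MiB
    "5": ["df", "-h"],                      # disk usage, human-readable
    "6": ["yum", "install", "-y", "httpd"], # web server package (Apache httpd)
    "7": ["ping", "-c", "4", "{host}"],     # connectivity check
    "8": ["yum", "install", "-y", "{package}"],
    "9": ["systemctl", "start", "{service}"],
    "10": ["reboot"],
    "11": ["shutdown", "-h", "now"],
}

def build_command(choice, **params):
    """Fill the placeholders of a menu entry and return an argv list."""
    return [part.format(**params) for part in LINUX_COMMANDS[choice]]

def run_choice(choice, dry_run=True, **params):
    """Return the shell-quoted command (dry run) or execute it and return its exit code."""
    cmd = build_command(choice, **params)
    if dry_run:
        return shlex.join(cmd)
    return subprocess.run(cmd).returncode
```

For example, run_choice("9", dry_run=False, service="httpd") would start the web server installed by option 6. Passing argument lists instead of one shell string avoids quoting problems with user-supplied paths or package names.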

Docker Container

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

Container images become containers at runtime; in the case of Docker, images become containers when they run on Docker Engine.

With the Python and Docker integration, you can check the status of the Docker service, view Docker info, list the available images, list the launched containers, and launch a new container, again just by pressing the corresponding menu number.
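A minimal sketch of these Docker options, assuming the script shells out to the docker CLI rather than using a Docker SDK, and that Docker runs as a systemd service (so its status comes from systemctl). The names DOCKER_QUERIES, launch_command, and run are hypothetical.

```python
import subprocess
from typing import List, Optional, Tuple

# Read-only queries offered by the menu.
DOCKER_QUERIES = {
    "status": ["systemctl", "status", "docker"],
    "info": ["docker", "info"],
    "images": ["docker", "images"],
    "containers": ["docker", "ps", "-a"],  # -a also lists stopped containers
}

def launch_command(image: str, name: Optional[str] = None) -> List[str]:
    """Build a 'docker run' command for a detached container with an interactive TTY."""
    cmd = ["docker", "run", "-dit"]
    if name:
        cmd += ["--name", name]
    cmd.append(image)
    return cmd

def run(cmd: List[str]) -> Tuple[int, str]:
    """Execute a command and return (exit code, captured stdout)."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.returncode, result.stdout
```

Usage might look like code, out = run(DOCKER_QUERIES["images"]) or run(launch_command("centos:7", name="web")). Starting the container with -dit keeps it running in the background so the menu can return immediately.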

Hadoop

A Hadoop cluster is a special type of computational cluster designed specifically for storing and analyzing huge amounts of unstructured data in a distributed computing environment.

Typically one machine in the cluster is designated as the NameNode and another machine as the ResourceManager, exclusively. The rest of the machines in the cluster act as both DataNode and NodeManager. These are the workers.

Configuring Hadoop in Non-Secure Mode

Hadoop’s Java configuration is driven by two types of important configuration files:

- Read-only default configuration: core-default.xml, hdfs-default.xml, yarn-default.xml and mapred-default.xml.

- Site-specific configuration: etc/hadoop/core-site.xml, etc/hadoop/hdfs-site.xml, etc/hadoop/yarn-site.xml and etc/hadoop/mapred-site.xml.

Additionally, you can control the Hadoop scripts found in the bin/ directory of the distribution by setting site-specific values via etc/hadoop/hadoop-env.sh and etc/hadoop/yarn-env.sh.

To configure the Hadoop cluster you will need to configure the environment in which the Hadoop daemons execute as well as the configuration parameters for the Hadoop daemons.
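The site-specific files are plain XML: a configuration element holding property entries, each with a name and a value. A script can therefore generate them per node. This is a sketch under assumptions: the property names fs.defaultFS, dfs.namenode.name.dir, and dfs.datanode.data.dir are the Hadoop 2/3 names (Hadoop 1.x uses fs.default.name, dfs.name.dir, and dfs.data.dir), port 9000 is an assumed choice, and the helper names are hypothetical.

```python
import os
import xml.etree.ElementTree as ET

def site_xml(properties):
    """Render a Hadoop *-site.xml document from a {name: value} mapping."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    return '<?xml version="1.0"?>\n' + ET.tostring(root, encoding="unicode")

def node_configs(role, namenode_host, storage_dir="", port=9000):
    """Return {filename: xml} for a namenode, datanode, or client."""
    # Every node needs to know where the NameNode listens.
    files = {"core-site.xml": site_xml({"fs.defaultFS": f"hdfs://{namenode_host}:{port}"})}
    if role == "namenode":
        files["hdfs-site.xml"] = site_xml({"dfs.namenode.name.dir": storage_dir})
    elif role == "datanode":
        files["hdfs-site.xml"] = site_xml({"dfs.datanode.data.dir": storage_dir})
    elif role != "client":
        raise ValueError(f"unknown role: {role}")
    return files

def write_configs(conf_dir, files):
    """Write each generated file into the Hadoop configuration directory."""
    for filename, text in files.items():
        with open(os.path.join(conf_dir, filename), "w") as fh:
            fh.write(text)
```

For instance, write_configs("/etc/hadoop", node_configs("datanode", "192.168.1.10", "/dn")) would write both files for one DataNode; the configuration directory depends on how Hadoop was installed.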

HDFS daemons are NameNode, SecondaryNameNode, and DataNode.

With the Python and Hadoop integration, you only need to provide the details of the NameNode, DataNodes, and client; the script then configures and starts the nodes automatically, forming a complete cluster.
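Once the configuration files are in place, bringing the cluster up is an ordered sequence of commands per host. The sketch below builds that sequence as ssh commands; it assumes passwordless ssh as root, hdfs on the PATH of every host, and the Hadoop 3 hdfs --daemon start syntax (older releases use hadoop-daemon.sh instead). The function names are hypothetical.

```python
import shlex
import subprocess

def cluster_plan(namenode, datanodes, client=None, user="root", format_namenode=True):
    """Return the ordered ssh commands that bring up HDFS on the given hosts."""
    def remote(host, *args):
        return ["ssh", f"{user}@{host}", *args]

    plan = []
    if format_namenode:
        # Formatting erases existing HDFS metadata, so only do it on first setup.
        plan.append(remote(namenode, "hdfs", "namenode", "-format"))
    plan.append(remote(namenode, "hdfs", "--daemon", "start", "namenode"))
    for host in datanodes:
        plan.append(remote(host, "hdfs", "--daemon", "start", "datanode"))
    if client:
        # The client runs no daemon; ask the NameNode for a cluster report instead.
        plan.append(remote(client, "hdfs", "dfsadmin", "-report"))
    return plan

def describe(plan):
    """Render each step as a shell-quoted string for display or logging."""
    return [shlex.join(cmd) for cmd in plan]

def run_plan(plan):
    """Execute each step in order, stopping at the first failure."""
    for cmd in plan:
        subprocess.run(cmd, check=True)
```

Keeping the plan as data means the script can show the user every step (via describe) before run_plan touches any machine.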

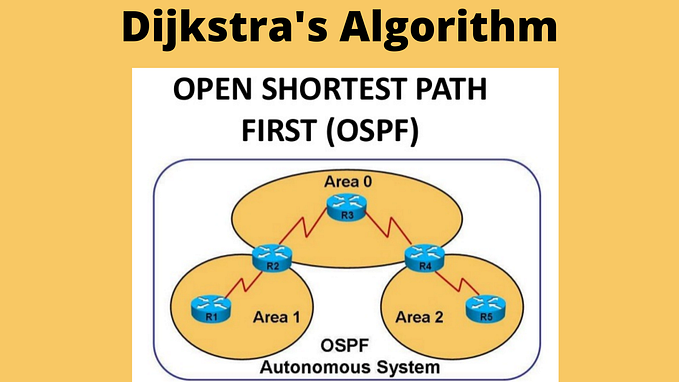

Amazon Web Services

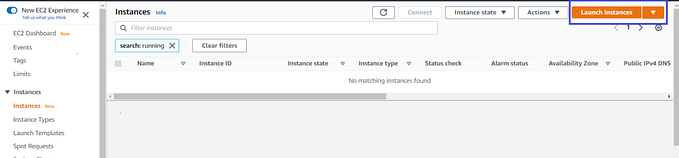

Amazon Web Services (AWS) is a subsidiary of Amazon providing on-demand cloud computing platforms and APIs to individuals, companies, and governments, on a metered pay-as-you-go basis. These cloud computing web services provide a variety of basic abstract technical infrastructure and distributed computing building blocks and tools. One of these services is Amazon Elastic Compute Cloud (EC2), which allows users to have at their disposal a virtual cluster of computers, available all the time, through the Internet. AWS’s version of virtual computers emulates most of the attributes of a real computer, including hardware central processing units (CPUs) and graphics processing units (GPUs) for processing; local/RAM memory; hard-disk/SSD storage; a choice of operating systems; networking; and pre-loaded application software such as web servers, databases, and customer relationship management (CRM).

The AWS technology is implemented at server farms throughout the world and maintained by the Amazon subsidiary. Fees are based on a combination of usage (known as a “pay-as-you-go” model), the hardware, operating system, software, and networking features chosen by the subscriber, and the required availability, redundancy, security, and service options. Subscribers can pay for a single virtual AWS computer, a dedicated physical computer, or clusters of either.

With the Python and AWS integration, you can automate common AWS tasks.

By pressing just a few numbers, you can:

- Create a key pair

- Create a security group

- Add ingress rules to an existing security group

- Launch an instance on the cloud

- Create an EBS volume

- Attach an EBS volume to an EC2 instance

- Configure a web server

- Create a static partition and mount the /var/www/html folder on the EBS volume

- Create an S3 bucket

- Create an object inside an S3 bucket and make it publicly accessible

- Remove a specific object from an S3 bucket

- Delete a specific S3 bucket

- Create a CloudFront distribution with S3 as the origin

- Delete a key pair

- Stop, start, and terminate EC2 instances

- Delete a security group

- Go back to the previous menu
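Most of these AWS options map onto single AWS CLI calls. The sketch below only builds the argument lists (so they can be shown or tested) and runs them with subprocess; it assumes the AWS CLI is installed and configured with aws configure, and every helper name, ID, and bucket name is a hypothetical placeholder. The web-server and partition steps run on the instance itself and are left out. On buckets created with current S3 defaults, ACLs are disabled and public access is blocked, so the public-read upload may be rejected until those bucket settings are changed.

```python
import subprocess

def create_key_pair(name):
    # --query/--output print only the private key, ready to save as a .pem file
    return ["aws", "ec2", "create-key-pair", "--key-name", name,
            "--query", "KeyMaterial", "--output", "text"]

def create_security_group(name, description):
    return ["aws", "ec2", "create-security-group",
            "--group-name", name, "--description", description]

def add_ingress_rule(group_id, port, cidr="0.0.0.0/0", protocol="tcp"):
    return ["aws", "ec2", "authorize-security-group-ingress", "--group-id", group_id,
            "--protocol", protocol, "--port", str(port), "--cidr", cidr]

def launch_instance(image_id, instance_type, key_name, group_id, count=1):
    return ["aws", "ec2", "run-instances", "--image-id", image_id,
            "--instance-type", instance_type, "--count", str(count),
            "--key-name", key_name, "--security-group-ids", group_id]

def create_volume(zone, size_gib):
    return ["aws", "ec2", "create-volume", "--availability-zone", zone, "--size", str(size_gib)]

def attach_volume(volume_id, instance_id, device="/dev/sdf"):
    return ["aws", "ec2", "attach-volume", "--volume-id", volume_id,
            "--instance-id", instance_id, "--device", device]

def change_instances(action, instance_ids):
    """Build start-, stop-, or terminate-instances for one or more instance IDs."""
    if action not in ("start", "stop", "terminate"):
        raise ValueError(f"unsupported action: {action}")
    return ["aws", "ec2", f"{action}-instances", "--instance-ids", *instance_ids]

def make_bucket(bucket):
    return ["aws", "s3", "mb", f"s3://{bucket}"]

def upload_public(path, bucket):
    return ["aws", "s3", "cp", path, f"s3://{bucket}/", "--acl", "public-read"]

def remove_object(bucket, key):
    return ["aws", "s3", "rm", f"s3://{bucket}/{key}"]

def remove_bucket(bucket):
    # 'rb' fails on a non-empty bucket unless --force is added
    return ["aws", "s3", "rb", f"s3://{bucket}"]

def create_distribution(bucket):
    return ["aws", "cloudfront", "create-distribution",
            "--origin-domain-name", f"{bucket}.s3.amazonaws.com"]

def delete_key_pair(name):
    return ["aws", "ec2", "delete-key-pair", "--key-name", name]

def delete_security_group(group_id):
    return ["aws", "ec2", "delete-security-group", "--group-id", group_id]

def run(cmd):
    """Execute an AWS CLI command and return its stdout; raises on a non-zero exit."""
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
```

A menu entry could then call, for example, run(change_instances("stop", ["i-0123456789abcdef0"])). The boto3 SDK is an alternative to shelling out, but the CLI form keeps each menu option visible as a single command.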

And with that, our first team task is complete!

Thanks to Vimal Sir for sharing his knowledge, time, and support.

This would not have been possible without the support of my team, so thanks to everyone: Ashish Dwivedi, Abhishek Sharma, Ankit Pramanik, and Krushna Prasad Sahoo.